-

We’re removing some of the restrictions in Email Routing so that AI Agents and task automation can better handle email workflows, including how Workers can reply to incoming emails.

It's now possible to keep a threaded email conversation with an Email Worker script as long as:

- The incoming email has to have valid DMARC ↗.

- The email can only be replied to once in the same `EmailMessage` event.

- The recipient in the reply must match the incoming sender.

- The outgoing sender domain must match the same domain that received the email.

- Every time an email passes through Email Routing or another MTA, an entry is added to the `References` list. We stop accepting replies to emails with more than 100 `References` entries to prevent abuse or accidental loops.
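The conditions above can be pictured as a pre-flight check. The following is a minimal sketch, not Email Routing's actual implementation: `canReply`, `ReplyCheckInput`, and `MAX_REFERENCES` are hypothetical names, DMARC validation is represented by a boolean that the caller supplies, addresses are compared case-insensitively as a simplification, and the `References` header is assumed to be a whitespace-separated list of message IDs.

```typescript
// Hypothetical sketch of the reply rules; not Email Routing's real code.
const MAX_REFERENCES = 100; // limit stated in the announcement

interface ReplyCheckInput {
  dmarcValid: boolean; // assumed to be determined elsewhere
  alreadyReplied: boolean; // true if this event was already replied to
  incomingFrom: string; // sender of the incoming email
  incomingTo: string; // address that received the incoming email
  replyFrom: string; // sender of the outgoing reply
  replyTo: string; // recipient of the outgoing reply
  referencesHeader: string | null; // raw References header, if present
}

// Extract the domain part of an address, lowercased.
function domainOf(address: string): string {
  return address.slice(address.lastIndexOf("@") + 1).toLowerCase();
}

// Count the message IDs in a References header.
function countReferences(header: string | null): number {
  if (header === null) return 0;
  return header.split(/\s+/).filter((id) => id.length > 0).length;
}

function canReply(input: ReplyCheckInput): { ok: boolean; reason?: string } {
  if (!input.dmarcValid) {
    return { ok: false, reason: "incoming email failed DMARC" };
  }
  if (input.alreadyReplied) {
    return { ok: false, reason: "event was already replied to" };
  }
  if (input.replyTo.toLowerCase() !== input.incomingFrom.toLowerCase()) {
    return { ok: false, reason: "reply recipient does not match incoming sender" };
  }
  if (domainOf(input.replyFrom) !== domainOf(input.incomingTo)) {
    return { ok: false, reason: "reply sender domain does not match receiving domain" };
  }
  if (countReferences(input.referencesHeader) > MAX_REFERENCES) {
    return { ok: false, reason: "too many References entries" };
  }
  return { ok: true };
}
```

A check like this is only useful for reasoning about why a reply might be rejected; the platform enforces the real rules when `message.reply()` is called.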

Here's an example of a Worker responding to Emails using a Workers AI model:

AI model responding to emails:

```ts
import PostalMime from "postal-mime";
import { createMimeMessage } from "mimetext";
import { EmailMessage } from "cloudflare:email";

export default {
  async email(message, env, ctx) {
    const email = await PostalMime.parse(message.raw);
    const res = await env.AI.run("@cf/meta/llama-2-7b-chat-fp16", {
      messages: [
        {
          role: "user",
          content: email.text ?? "",
        },
      ],
    });

    // message-id is generated by mimetext
    const response = createMimeMessage();
    response.setHeader("In-Reply-To", message.headers.get("Message-ID")!);
    response.setSender("agent@example.com");
    response.setRecipient(message.from);
    response.setSubject("Llama response");
    response.addMessage({
      contentType: "text/plain",
      data:
        res instanceof ReadableStream
          ? await new Response(res).text()
          : res.response!,
    });

    const replyMessage = new EmailMessage("<email>", message.from, response.asRaw());
    await message.reply(replyMessage);
  },
} satisfies ExportedHandler<Env>;
```

See Reply to emails from Workers for more information.

-

You can now access environment variables and secrets on `process.env` when using the `nodejs_compat` compatibility flag.

```js
const apiClient = ApiClient.new({ apiKey: process.env.API_KEY });
const LOG_LEVEL = process.env.LOG_LEVEL || "info";
```

In Node.js, environment variables are exposed via the global `process.env` object. Some libraries assume that this object will be populated, and many developers may be used to accessing variables in this way.

Previously, the `process.env` object was always empty unless written to in Worker code. This could cause unexpected errors or friction when developing Workers using code previously written for Node.js.

Now, environment variables, secrets, and version metadata can all be accessed on `process.env`.

To opt in to the new `process.env` behaviour now, add the `nodejs_compat_populate_process_env` compatibility flag to your `wrangler.json` configuration:

```jsonc
{
  // Rest of your configuration
  // Add "nodejs_compat_populate_process_env" to your compatibility_flags array
  "compatibility_flags": ["nodejs_compat", "nodejs_compat_populate_process_env"],
  // Rest of your configuration
}
```

The equivalent in `wrangler.toml`:

```toml
compatibility_flags = [ "nodejs_compat", "nodejs_compat_populate_process_env" ]
```

After April 1, 2025, populating `process.env` will become the default behavior when `nodejs_compat` is enabled and your Worker's `compatibility_date` is after "2025-04-01".
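As a minimal sketch of how code might consume these values defensively, the helper below reads the `API_KEY` and `LOG_LEVEL` variables used in the example above and fails loudly when a required value is missing. `loadConfig` and `AppConfig` are hypothetical names introduced for illustration; the function takes the environment as a plain record so it can be exercised outside a Worker.

```typescript
// Hypothetical helper: validates and normalizes values read from an
// environment object such as process.env.
interface AppConfig {
  apiKey: string;
  logLevel: string;
}

function loadConfig(env: Record<string, string | undefined>): AppConfig {
  const apiKey = env.API_KEY;
  if (!apiKey) {
    // An empty process.env (for example, when the flag is not enabled)
    // surfaces here instead of as an obscure failure later on.
    throw new Error("API_KEY is not set");
  }
  // Fall back to "info" when LOG_LEVEL is unset or empty.
  return { apiKey, logLevel: env.LOG_LEVEL || "info" };
}

// In a Worker with the flag enabled, this would be called as:
//   const config = loadConfig(process.env);
```

Passing the environment in as an argument, rather than reading the global inside the function, keeps the helper easy to test.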

-

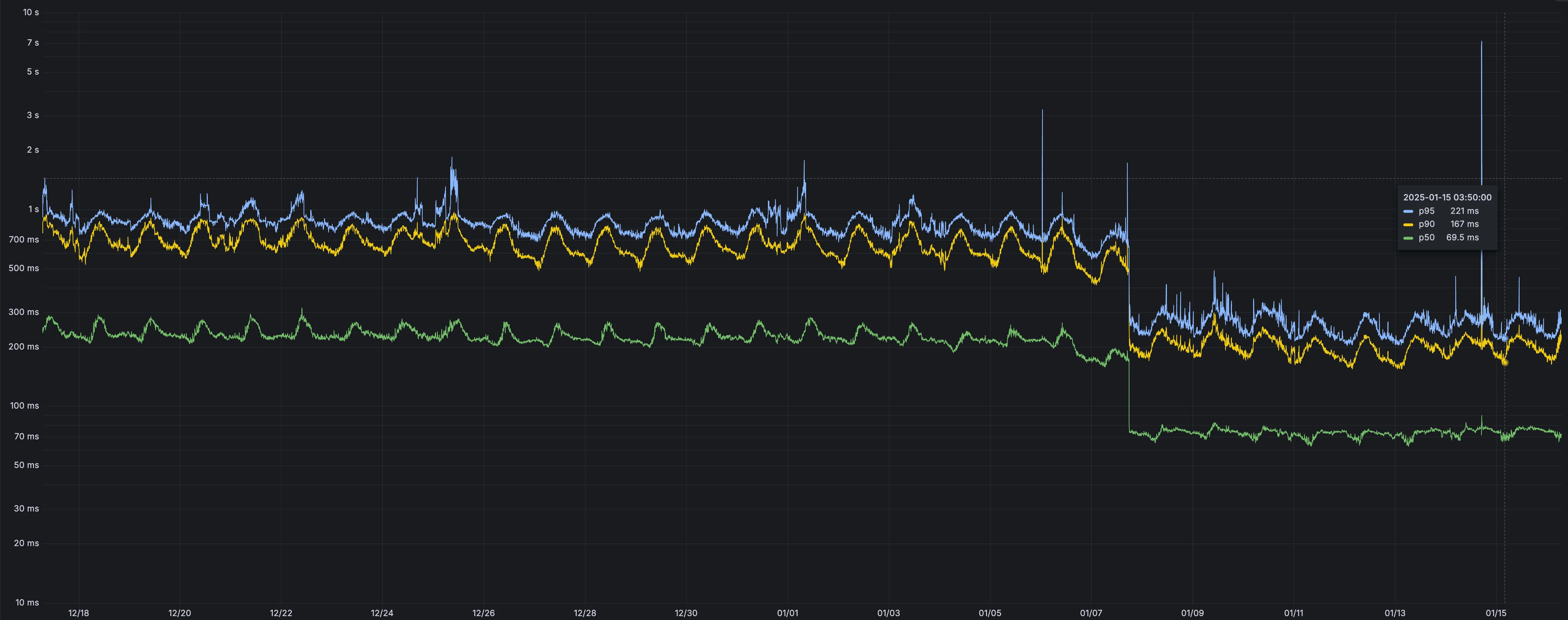

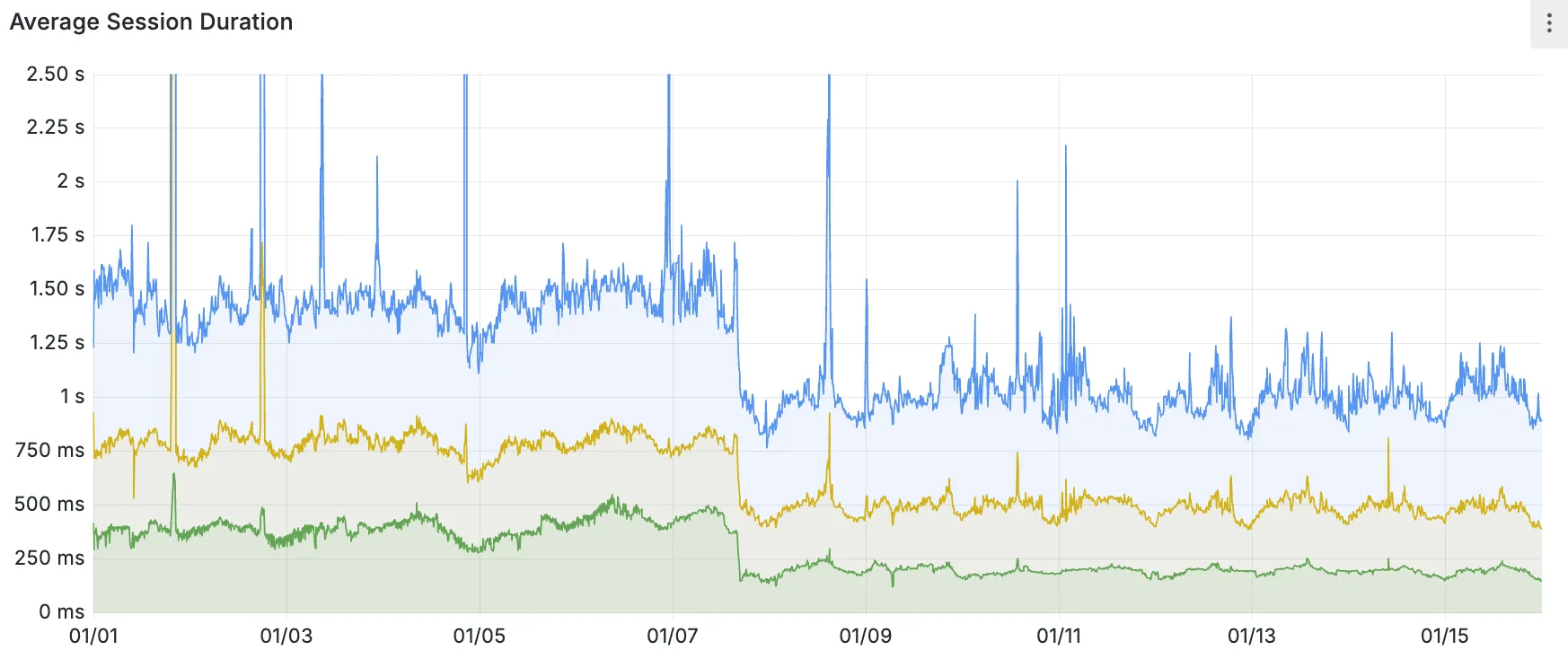

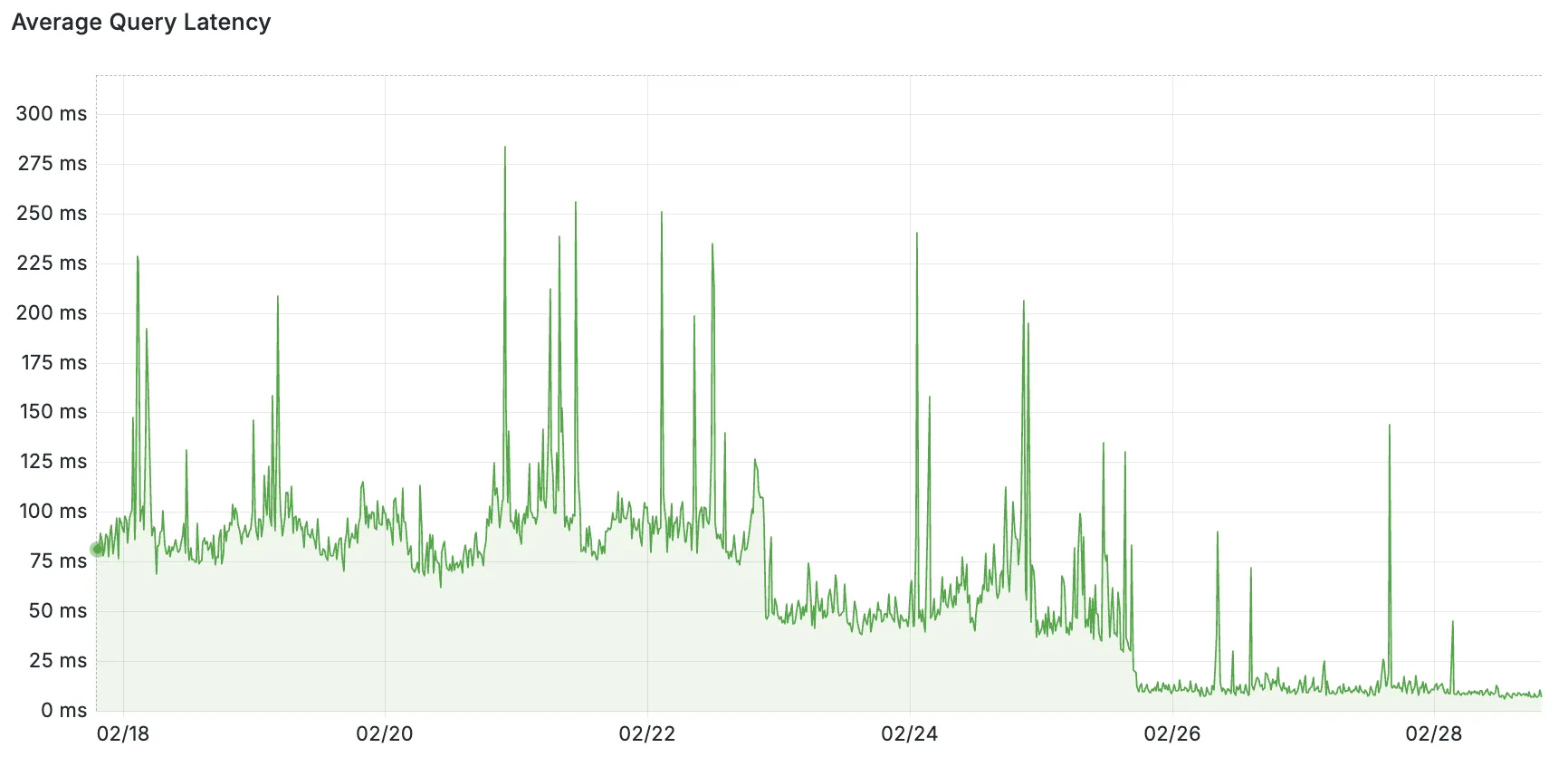

Hyperdrive now pools database connections in one or more regions close to your database. This means that your uncached queries and new database connections have up to 90% less latency as measured from connection pools.

By improving placement of Hyperdrive database connection pools, Workers' Smart Placement is now more effective when used with Hyperdrive, ensuring that your Worker can be placed as close to your database as possible.

With this update, Hyperdrive also uses Cloudflare's standard IP address ranges ↗ to connect to your database. This enables you to configure the firewall policies (IP access control lists) of your database to only allow access from Cloudflare and Hyperdrive.

Refer to documentation on how Hyperdrive makes connecting to regional databases from Cloudflare Workers fast.

This improvement is enabled on all Hyperdrive configurations.

-

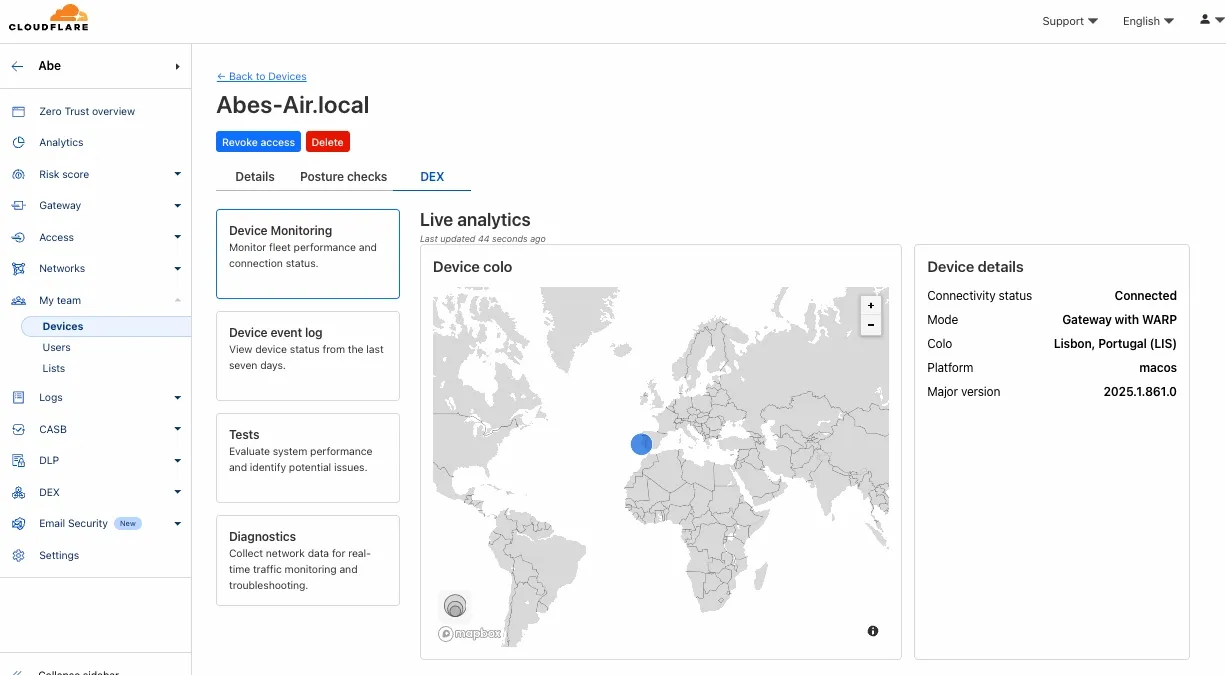

Digital Experience Monitoring (DEX) provides visibility into device, network, and application performance across your Cloudflare SASE deployment. The latest release of the Cloudflare One agent (v2025.1.861) now includes device endpoint monitoring capabilities to provide deeper visibility into end-user device performance which can be analyzed directly from the dashboard.

Device health metrics are now automatically collected, allowing administrators to:

- View the last network a user was connected to

- Monitor CPU and RAM utilization on devices

- Identify resource-intensive processes running on endpoints

This feature complements existing DEX features like synthetic application monitoring and network path visualization, creating a comprehensive troubleshooting workflow that connects application performance with device state.

For more details, refer to our DEX documentation.

-

Today, we are thrilled to announce Media Transformations, a new service that brings the magic of Image Transformations to short-form video files, wherever they are stored!

For customers with a huge volume of short video — generative AI output, e-commerce product videos, social media clips, or short marketing content — uploading those assets to Stream is not always practical. Sometimes, the greatest friction to getting started was the thought of all that migrating. Customers want a simpler solution that retains their current storage strategy to deliver small, optimized MP4 files. Now you can do that with Media Transformations.

To transform a video or image, enable transformations for your zone, then make a simple request with a specially formatted URL. The result is an MP4 that can be used in an HTML video element without a player library. If your zone already has Image Transformations enabled, then it is ready to optimize videos with Media Transformations, too.

URL format:

```
https://example.com/cdn-cgi/media/<OPTIONS>/<SOURCE-VIDEO>
```

For example, we have a short video of the mobile in Austin's office. The original is nearly 30 megabytes and wider than necessary for this layout. Consider a simple width adjustment:

Example URL:

```
https://example.com/cdn-cgi/media/width=640/<SOURCE-VIDEO>
https://developers.cloudflare.com/cdn-cgi/media/width=640/https://pub-d9fcbc1abcd244c1821f38b99017347f.r2.dev/aus-mobile.mp4
```

The result is less than 3 megabytes, properly sized, and delivered dynamically so that customers do not have to manage the creation and storage of these transformed assets.
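The URL format lends itself to a small builder function. The sketch below is illustrative only: `buildMediaUrl` is a hypothetical helper, `width` is the only option shown in this announcement, and joining multiple options with commas is an assumption carried over from how Image Transformations URLs are written.

```typescript
// Hypothetical helper that assembles a Media Transformations URL of the form
// https://<zone>/cdn-cgi/media/<OPTIONS>/<SOURCE-VIDEO>
function buildMediaUrl(
  zone: string,
  options: Record<string, string | number>,
  source: string,
): string {
  const entries = Object.entries(options);
  if (entries.length === 0) {
    throw new Error("at least one transformation option is required");
  }
  // Assumption: multiple options are comma-separated key=value pairs.
  const optionString = entries.map(([key, value]) => `${key}=${value}`).join(",");
  return `https://${zone}/cdn-cgi/media/${optionString}/${source}`;
}

// Reproduces the example URL from the announcement.
const exampleUrl = buildMediaUrl(
  "developers.cloudflare.com",
  { width: 640 },
  "https://pub-d9fcbc1abcd244c1821f38b99017347f.r2.dev/aus-mobile.mp4",
);
```

The resulting string can be used directly as the `src` of an HTML video element.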

For more information, learn about Transforming Videos.

-

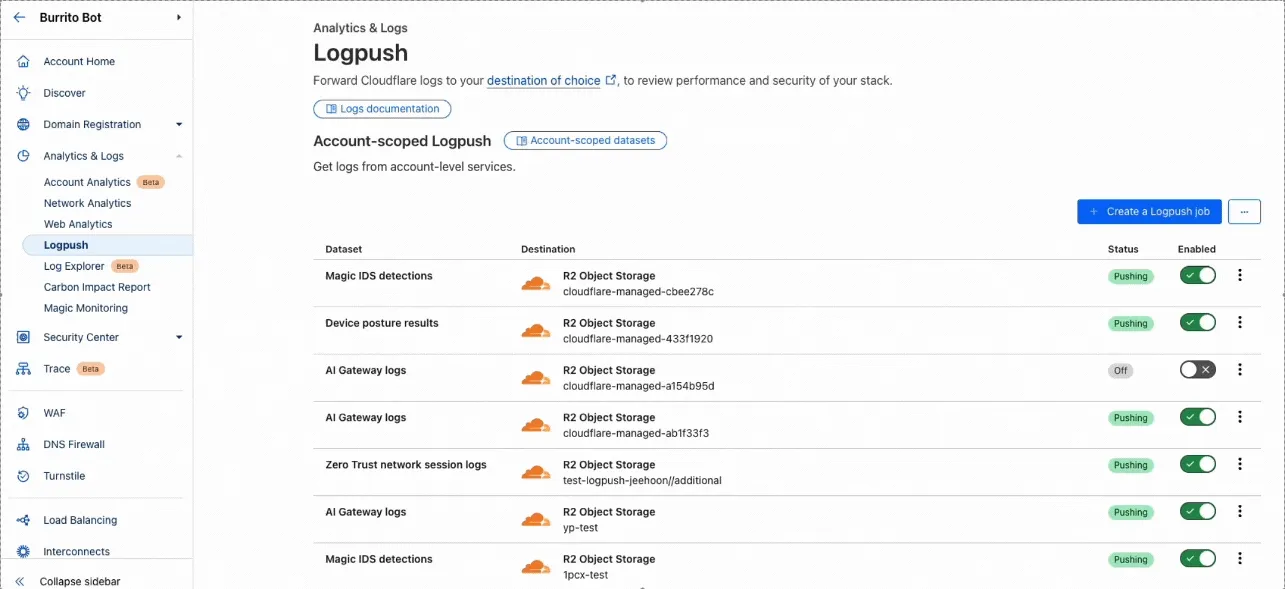

We’ve streamlined the Logpush setup process by integrating R2 bucket creation directly into the Logpush workflow!

Now, you no longer need to navigate multiple pages to manually create an R2 bucket or copy credentials. With this update, you can seamlessly configure a Logpush job to R2 in just one click, reducing friction and making setup faster and easier.

This enhancement makes it easier for customers to adopt Logpush and R2.

For more details, refer to our Logs documentation.

-

You can now use bucket locks to set retention policies on your R2 buckets (or specific prefixes within your buckets) for a specified period — or indefinitely. This can help ensure compliance by protecting important data from accidental or malicious deletion.

Locks give you a few ways to ensure your objects are retained (not deleted or overwritten). You can:

- Lock objects for a specific duration, for example 90 days.

- Lock objects until a certain date, for example January 1, 2030.

- Lock objects indefinitely, until the lock is explicitly removed.

Buckets can have up to 1,000 bucket lock rules. Each rule specifies which objects it covers (via prefix) and how long those objects must remain retained.
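One way to reason about how rules combine is to model them in code. The sketch below is a mental model, not R2's implementation: `LockRule`, `Retention`, and `isLocked` are hypothetical names, an empty prefix is assumed to cover every object, and duration-based retention is assumed to be measured from the object's upload time.

```typescript
// Hypothetical model of bucket lock rules; not R2's actual implementation.
type Retention =
  | { kind: "days"; days: number } // lock for a duration
  | { kind: "until"; date: Date } // lock until a fixed date
  | { kind: "indefinite" }; // lock until the rule is removed

interface LockRule {
  name: string;
  prefix: string; // "" is assumed to match every object in the bucket
  retention: Retention;
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// An object is treated as locked if any rule covering its key is still in effect.
function isLocked(key: string, uploadedAt: Date, now: Date, rules: LockRule[]): boolean {
  for (const rule of rules) {
    if (!key.startsWith(rule.prefix)) continue;
    const retention = rule.retention;
    if (retention.kind === "indefinite") return true;
    if (retention.kind === "until" && now.getTime() < retention.date.getTime()) return true;
    if (retention.kind === "days") {
      // Assumption: the retention period starts at upload time.
      const expiresAt = uploadedAt.getTime() + retention.days * MS_PER_DAY;
      if (now.getTime() < expiresAt) return true;
    }
  }
  return false;
}
```

Under this model, an object covered by several rules stays protected until the longest-lived applicable rule has lapsed.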

Here are a couple of examples showing how you can configure bucket lock rules using Wrangler:

```sh
npx wrangler r2 bucket lock add <bucket> --name 180-days-all --retention-days 180
```

```sh
npx wrangler r2 bucket lock add <bucket> --name indefinite-logs --prefix logs/ --retention-indefinite
```

For more information on bucket locks and how to set retention policies for objects in your R2 buckets, refer to our documentation.

-

We're excited to announce that new logging capabilities for Remote Browser Isolation (RBI) through Logpush are available in Beta starting today!

With these enhanced logs, administrators can gain visibility into end user behavior in the remote browser and track blocked data extraction attempts, along with the websites that triggered them, in an isolated session.

```json
{
  "AccountID": "$ACCOUNT_ID",
  "Decision": "block",
  "DomainName": "www.example.com",
  "Timestamp": "2025-02-27T23:15:06Z",
  "Type": "copy",
  "UserID": "$USER_ID"
}
```

User Actions available:

- Copy & Paste

- Downloads & Uploads

- Printing
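Once these logs land in a destination, they can be summarized with a few lines of code. The following sketch assumes the logs arrive as newline-delimited JSON with the fields shown in the sample record above; `RbiUserActionLog` and `blockedByDomain` are hypothetical names, and only the field values that appear in the sample (`"block"`, `"copy"`) are taken from the source.

```typescript
// Shape of a log record, based only on the fields in the sample above.
interface RbiUserActionLog {
  AccountID: string;
  Decision: string;
  DomainName: string;
  Timestamp: string;
  Type: string;
  UserID: string;
}

// Count blocked user actions per domain.
// Assumption: input is newline-delimited JSON, one record per line.
function blockedByDomain(ndjson: string): Map<string, number> {
  const counts = new Map<string, number>();
  for (const line of ndjson.split("\n")) {
    if (line.trim() === "") continue; // skip blank lines
    const entry = JSON.parse(line) as RbiUserActionLog;
    if (entry.Decision !== "block") continue;
    counts.set(entry.DomainName, (counts.get(entry.DomainName) ?? 0) + 1);
  }
  return counts;
}
```

Grouping by `DomainName` highlights which websites trigger the most blocked extraction attempts; the same loop could group by `UserID` or `Type` instead.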

Learn more about how to get started with Logpush in our documentation.

-

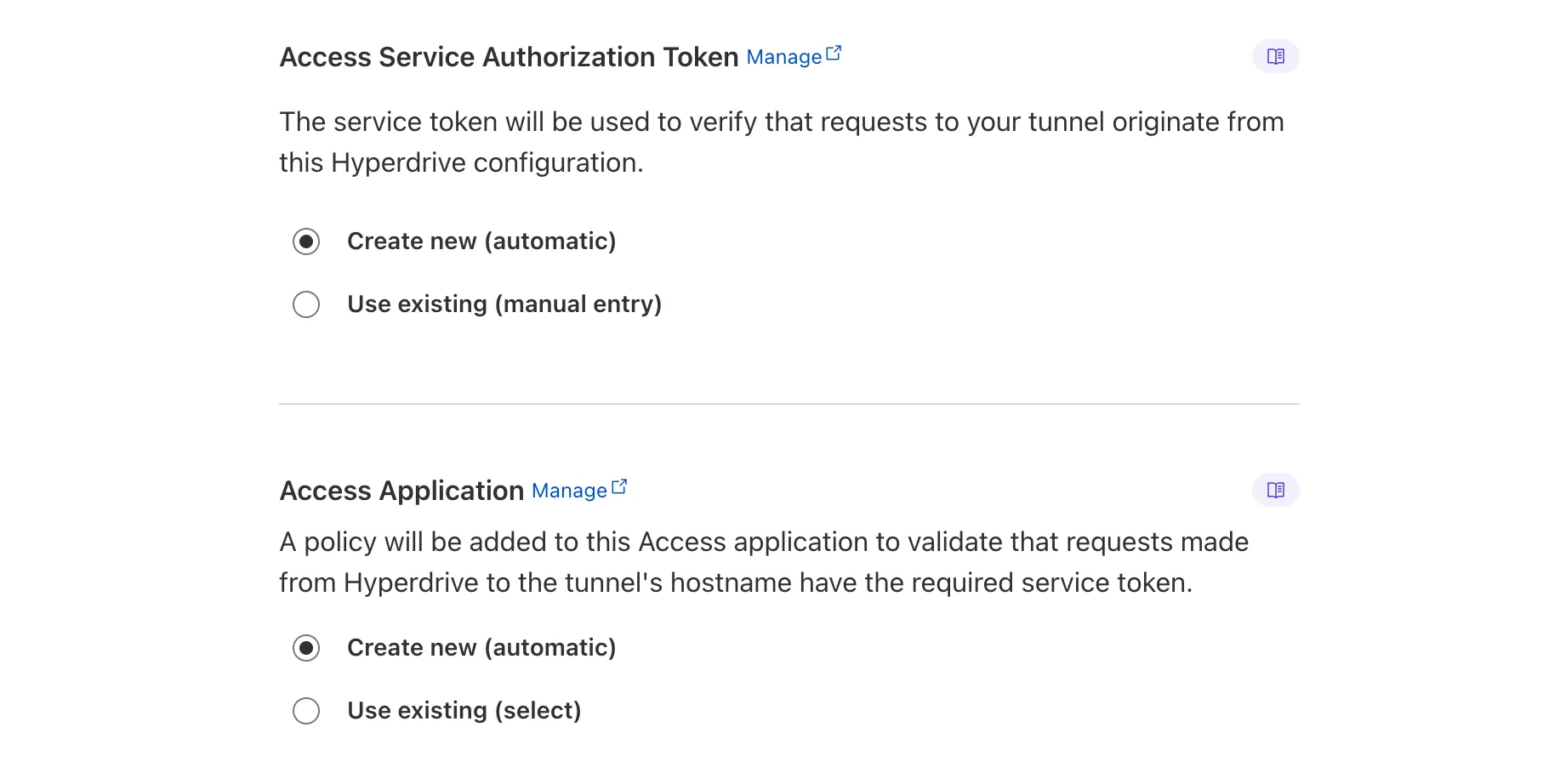

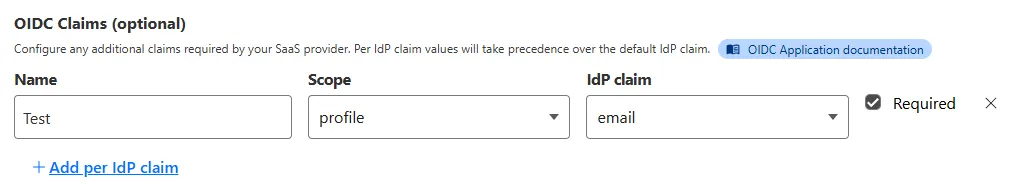

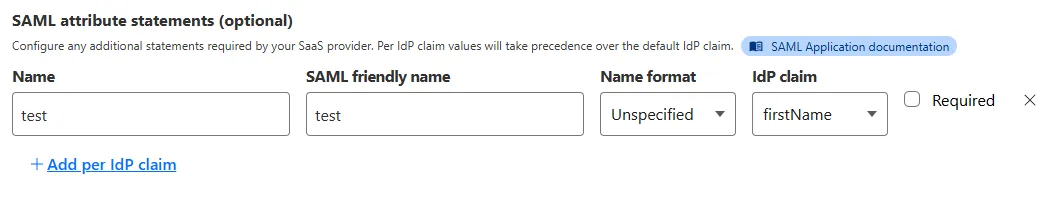

Access for SaaS applications now includes more configuration options to support a wider array of SaaS applications.

OIDC apps now include:

- Group Filtering via RegEx

- OIDC Claim mapping from an IdP

- OIDC token lifetime control

- Advanced OIDC auth flows including hybrid and implicit flows

SAML apps now include improved SAML attribute mapping from an IdP.

SAML identities sent to Access applications can be fully customized using JSONata expressions. This allows admins to configure the precise identity SAML statement sent to a SaaS application.

-

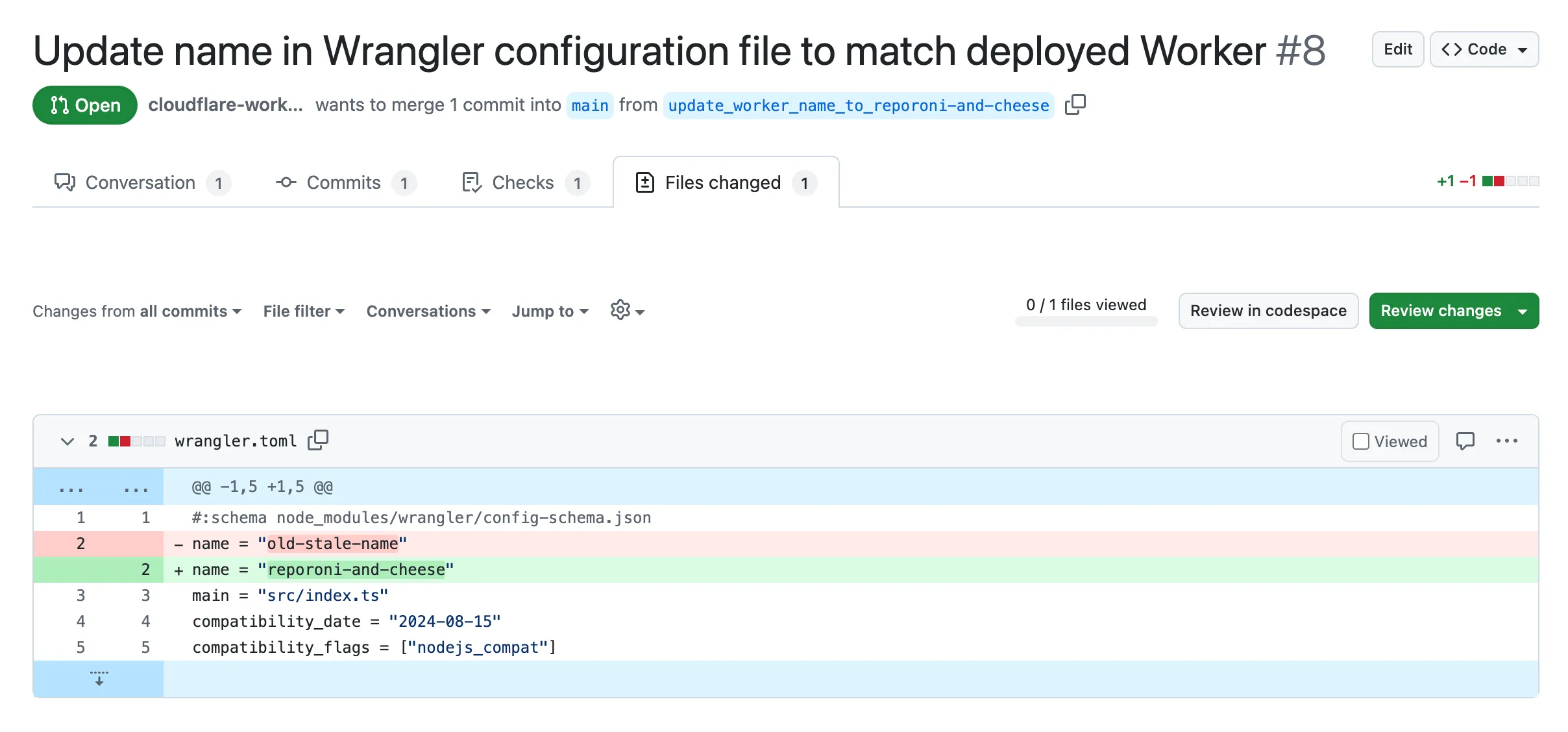

We've published a release candidate of the next major version of Wrangler, the CLI for Cloudflare Workers: `wrangler@4.0.0-rc.0`.

You can run the following command to install it and be one of the first to try it out:

```sh
npm i wrangler@v4-rc
```

```sh
pnpm add wrangler@v4-rc
```

```sh
yarn add wrangler@v4-rc
```

Unlike previous major versions of Wrangler, which were foundational rewrites ↗ and rearchitectures ↗, version 4 of Wrangler includes a much smaller set of changes. If you use Wrangler today, your workflow is very unlikely to change. Before we release Wrangler v4 and advance past the release candidate stage, we'll share a detailed migration guide in the Workers developer docs. But for the vast majority of cases, you won't need to do anything to migrate: things will just work as they do today. We are sharing this release candidate in advance of the official release of v4, so that you can try it out early and share feedback.

Version 4 of Wrangler updates the version of esbuild ↗ that Wrangler uses internally, allowing you to use modern JavaScript language features, including:

- The `using` keyword from the Explicit Resource Management standard makes it easier to work with the JavaScript-native RPC system built into Workers. This means that when you obtain a stub, you can ensure that it is automatically disposed when you exit the scope it was created in:

  ```js
  async function sendEmail(id, message) {
    using user = await env.USER_SERVICE.findUser(id);
    await user.sendEmail(message);
    // user[Symbol.dispose]() is implicitly called at the end of the scope.
  }
  ```

- Import attributes ↗ allow you to denote the type or other attributes of the module that your code imports. For example, you can import a JSON module, using the following syntax:

  ```js
  import data from "./data.json" with { type: "json" };
  ```

All commands that access resources (for example, `wrangler kv`, `wrangler r2`, `wrangler d1`) now access local datastores by default, ensuring consistent behavior.

Moving forward, the active, maintenance, and current versions of Node.js ↗ will be officially supported by Wrangler. This means the minimum officially supported version of Node.js you must have installed for Wrangler v4 will be Node.js v18 or later. This policy mirrors how many other packages and CLIs support older versions of Node.js, and ensures that as long as you are using a version of Node.js that the Node.js project itself supports, this will be supported by Wrangler as well.

All previously deprecated features in Wrangler v2 ↗ and in Wrangler v3 ↗ have now been removed. Additionally, the following features that were deprecated during the Wrangler v3 release have been removed:

- Legacy Assets (using `wrangler dev/deploy --legacy-assets` or the `legacy_assets` config file property). Instead, we recommend you migrate to Workers assets ↗.

- Legacy Node.js compatibility (using `wrangler dev/deploy --node-compat` or the `node_compat` config file property). Instead, use the `nodejs_compat` compatibility flag ↗. This includes the functionality from legacy `node_compat` polyfills and natively implemented Node.js APIs.

- `wrangler version`. Instead, use `wrangler --version` to check the current version of Wrangler.

- `getBindingsProxy()` (via `import { getBindingsProxy } from "wrangler"`). Instead, use the `getPlatformProxy()` API ↗, which takes exactly the same arguments.

- `usage_model`. This no longer has any effect, after the rollout of Workers Standard Pricing ↗.

We'd love your feedback! If you find a bug or hit a roadblock when upgrading to Wrangler v4, open an issue on the `cloudflare/workers-sdk` repository on GitHub ↗.
